Last Updated on September 24, 2021 by Asif Iqbal Shaik

A lot of new TVs have the HDR feature, and brands make sure to highlight HDR in their marketing material. Earlier, the feature was limited to high-end 4K TVs, but of late, even affordable TVs come with HDR. But what exactly is HDR, and how does it improve a TV’s picture quality? Very few consumers know how HDR works, or whether it works as well on affordable TVs as it does on premium ones. In this article, we will explain how HDR works in TVs, what the different HDR standards are, and how they differ from each other.

What is HDR in TVs?

HDR stands for High Dynamic Range. It is among the greatest improvements TVs have seen in a long time. TVs with HDR can display deeper colours, a wider colour gamut, and much higher brightness. All of this results in more immersive video quality compared to SDR (Standard Dynamic Range) TVs.

However, not all TVs with HDR perform equally. Usually, higher-end TVs have better HDR performance than lower-end TVs. Moreover, there are various standards for HDR: HDR10, HDR10+, Dolby Vision, and HLG (Hybrid Log-Gamma). So, it is easy to get lost in the world of HDR, which makes it harder to choose a good TV.

What Are The Advantages Of HDR on TVs?

As mentioned earlier, HDR offers a wider range of colours, higher brightness, and a life-like dynamic range.

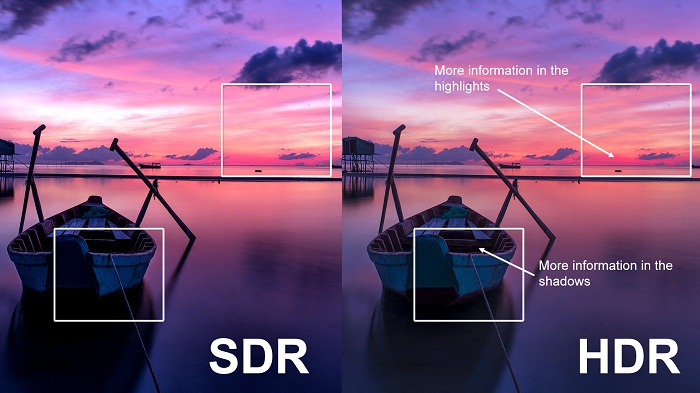

1. Wider Dynamic Range

HDR TVs can show bright and dark parts of an image without losing detail in either. Take a complex scene such as a sunset. As the comparison image shows, an SDR TV can’t display the scene without either crushing the shadows (darker parts of the image) or blowing out the highlights (brighter parts of the image). The SDR TV on the left blows out the sun’s outline, while the HDR TV on the right retains it much better. SDR TVs usually have 6-bit or 8-bit panels, while HDR TVs usually have 10-bit panels (or 8-bit panels that use dithering to approximate 10-bit), and HDR formats carry 10-bit or 12-bit signals.
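To see why bit depth matters, here is a small Python sketch that counts how many shades each colour channel can take at a given bit depth, and how many RGB combinations that adds up to. It assumes three channels (red, green, blue) that share the same bit depth; it says nothing about how a particular panel reaches that depth.

```python
def shades_per_channel(bits: int) -> int:
    """Number of distinct levels one colour channel can encode."""
    return 2 ** bits


def total_colours(bits: int) -> int:
    """Distinct RGB combinations, assuming 3 channels with equal bit depth."""
    return shades_per_channel(bits) ** 3


if __name__ == "__main__":
    for bits in (6, 8, 10, 12):
        print(f"{bits:>2}-bit: {shades_per_channel(bits):>5} shades per channel, "
              f"{total_colours(bits):,} colours")
```

An 8-bit panel gets 256 shades per channel (about 16.7 million colours), while a 10-bit one gets 1,024 (about 1.07 billion). The finer steps help smooth gradients, such as a sunset sky, avoid visible banding.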

Because an HDR TV typically uses a higher-quality panel than an SDR TV, it can display higher brightness and a broader range of brightness stops. That means it can show both the bright sun and the shadows in the scene without losing the details or colours in either part of the image.
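A “stop” is a doubling of light, so a display’s dynamic range in stops is the base-2 logarithm of its peak brightness divided by its black level. The sketch below uses illustrative figures that are assumptions, not measurements of any specific TV: an SDR-class display with a 100-nit peak and a 0.1-nit black level, and an HDR-class display with a 1,000-nit peak and a 0.05-nit black level.

```python
import math


def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Dynamic range in stops: each stop is a doubling of luminance."""
    if peak_nits <= 0 or black_nits <= 0:
        raise ValueError("luminance values must be positive")
    return math.log2(peak_nits / black_nits)


# Illustrative (assumed) figures, not measurements of real TVs.
sdr = dynamic_range_stops(peak_nits=100, black_nits=0.1)
hdr = dynamic_range_stops(peak_nits=1000, black_nits=0.05)
print(f"SDR-class: {sdr:.1f} stops, HDR-class: {hdr:.1f} stops")
```

With these assumed numbers, the brighter peak and deeper black together add a little over four stops (about 10.0 versus 14.3).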

2. Higher Brightness

HDR TVs can produce higher brightness levels, which lets them reproduce scenes closer to how they would appear in real life. For example, in a scene with a bright sun, an HDR TV can push the display’s brightness to very high levels. In theory, your eyes then see brightness levels through the TV that are closer to what they would see if you were watching the scene in real life.
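HDR10 and HDR10+ describe brightness in absolute terms using the Perceptual Quantizer (PQ) transfer function standardised as SMPTE ST 2084, which maps a normalised signal value between 0 and 1 to a luminance of up to 10,000 nits. The sketch below implements the PQ curve and its inverse with the constants from that standard. It only shows how a signal value maps to nits; how a particular TV then renders that target is up to the TV.

```python
# PQ (SMPTE ST 2084) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_to_nits(signal: float) -> float:
    """PQ EOTF: normalised signal value in [0, 1] -> luminance in nits."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in [0, 1]")
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def nits_to_pq(nits: float) -> float:
    """Inverse EOTF: luminance in nits -> normalised PQ signal value."""
    if not 0.0 <= nits <= PEAK_NITS:
        raise ValueError("luminance must be between 0 and 10,000 nits")
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (0.1, 100, 1000, 4000, 10000):
        print(f"{nits:>7} nits -> PQ signal {nits_to_pq(nits):.3f}")
```

Roughly half of the signal range (up to about 0.51) covers 0 to 100 nits, about the brightness SDR content is mastered for; the rest is reserved for brighter highlights.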

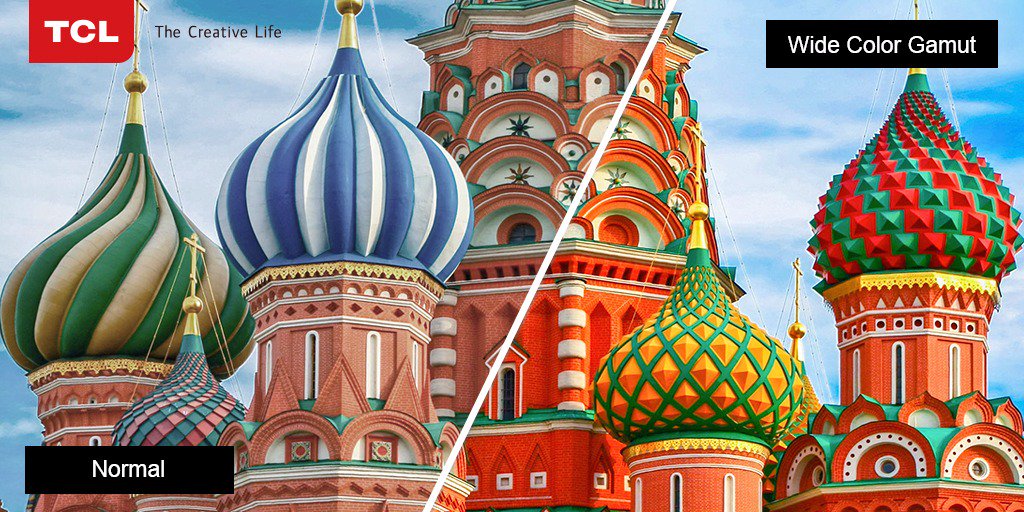

3. Wider Colour Gamut

TVs with WCG (Wide Colour Gamut) can display more colours than TVs without it. For example, a TV with WCG can display more shades of red than a TV with a standard colour gamut (SCG). Mostly, WCG-compatible TVs can show deeper greens and reds than TVs without WCG. As the comparison image shows, a Wide Colour Gamut has deeper colours than a Standard Colour Gamut.
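One common, if rough, way to compare colour gamuts is to plot each gamut’s red, green, and blue primaries on the CIE 1931 xy chromaticity diagram and compare the areas of the resulting triangles. The article doesn’t name specific colour spaces, so the sketch below assumes the standard primaries of Rec. 709 (the HD/SDR video gamut) as the “standard” gamut and DCI-P3 and Rec. 2020 as wide gamuts. Area in xy is not perceptually uniform, so treat the ratios as a ballpark.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]

# CIE 1931 xy chromaticities of the red, green and blue primaries.
PRIMARIES: Dict[str, Tuple[Point, Point, Point]] = {
    "Rec. 709": ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060)),
    "DCI-P3": ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060)),
    "Rec. 2020": ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046)),
}


def triangle_area(r: Point, g: Point, b: Point) -> float:
    """Area of the gamut triangle via the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = r, g, b
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


if __name__ == "__main__":
    base = triangle_area(*PRIMARIES["Rec. 709"])
    for name, prims in PRIMARIES.items():
        area = triangle_area(*prims)
        print(f"{name:<9} area={area:.4f}  ({area / base:.2f}x Rec. 709)")
```

Under these assumptions, DCI-P3 covers about 1.36 times the xy area of Rec. 709 and Rec. 2020 about 1.89 times. Most of the extra area lies toward the red and green corners, which matches the deeper reds and greens that WCG TVs can show.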

While WCG is not a requirement for HDR, the feature usually goes hand in hand with it. A TV with both HDR and WCG can show various types of scenes in a more lifelike and immersive way.

What is HDR Metadata?

HDR content comes with metadata: a set of information that helps a display device show the content optimally. It includes the RGB colour primaries, white point, and brightness range of the display the content was mastered on, along with MaxCLL (Maximum Content Light Level) and MaxFALL (Maximum Frame-Average Light Level).
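MaxCLL and MaxFALL are simple statistics over the whole video. Roughly following their definitions in CTA-861.3, each pixel’s light level is the largest of its R, G, and B values in nits; MaxCLL is the brightest such pixel anywhere in the content, and MaxFALL is the highest per-frame average of those values. The sketch assumes frames are given as lists of (R, G, B) tuples already in nits, which is a simplification: real mastering tools work on decoded video. The two 2x2 frames are hypothetical.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # linear R, G, B light levels in nits
Frame = Sequence[Pixel]


def pixel_light_level(p: Pixel) -> float:
    """A pixel's light level is the largest of its R, G, B components."""
    return max(p)


def max_cll_and_fall(frames: List[Frame]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) in nits for a list of non-empty frames."""
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        levels = [pixel_light_level(p) for p in frame]
        max_cll = max(max_cll, max(levels))            # brightest pixel so far
        max_fall = max(max_fall, sum(levels) / len(levels))  # brightest frame average
    return max_cll, max_fall


# Two tiny hypothetical 2x2 frames: a dim interior and a shot with the sun.
dim_room = [(20, 15, 10), (30, 25, 20), (5, 5, 5), (40, 35, 30)]
sunset = [(900, 700, 300), (200, 120, 60), (50, 40, 30), (10, 10, 10)]
print(max_cll_and_fall([dim_room, sunset]))  # (900, 290.0)
```

A single bright sun pushes MaxCLL to 900 nits, while MaxFALL (290 nits) shows that no frame is bright on average.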

There are two types of HDR metadata: static and dynamic. With static HDR metadata, the same colour range and brightness values apply to every scene and frame in a video file, so the tone mapping (how the TV fits the content’s brightness and colours into what its own panel can display) is constant for the whole video. In real life, however, every scene can have a different colour range and brightness level. That’s where dynamic HDR metadata comes in: in videos with dynamic metadata, tone mapping changes on a scene-by-scene or frame-by-frame basis, which represents each scene more accurately.
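To make the difference concrete, here is a deliberately simple tone-mapping curve. It is a hypothetical example, not the curve any real TV, HDR10+, or Dolby Vision uses: light levels up to a “knee” at 75% of the display’s peak pass through unchanged, and everything above is squeezed linearly into the remaining headroom. With static metadata the TV only knows the brightest value in the whole film; with dynamic metadata it knows the brightest value in the current scene. All the nit values are assumptions.

```python
def tone_map(level: float, content_max: float, display_peak: float,
             knee_ratio: float = 0.75) -> float:
    """Map a content light level (nits) onto a display with a lower peak.

    Hypothetical curve: identity below the knee, linear compression above it.
    """
    if content_max <= display_peak:
        return min(level, display_peak)  # content already fits: no compression
    knee = knee_ratio * display_peak
    if level <= knee:
        return level
    # Squeeze [knee, content_max] into [knee, display_peak].
    return knee + (level - knee) * (display_peak - knee) / (content_max - knee)


DISPLAY_PEAK = 400   # assumed 400-nit budget TV
FILM_MAX_CLL = 4000  # assumed static metadata for the whole film
SCENE_MAX = 380      # assumed dynamic metadata for one dim scene
pixel = 350          # a highlight in that dim scene

static_out = tone_map(pixel, FILM_MAX_CLL, DISPLAY_PEAK)
dynamic_out = tone_map(pixel, SCENE_MAX, DISPLAY_PEAK)
print(f"static: {static_out:.1f} nits, dynamic: {dynamic_out:.1f} nits")
```

Because the static curve must leave room for a 4,000-nit highlight that may appear elsewhere in the film, it compresses this 350-nit highlight to about 301 nits. The dynamic curve knows the scene peaks at 380 nits, which the TV can show, so it leaves the highlight as mastered.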

Do All HDR TVs Perform Similarly? What Are The Differences Between Low-End And High-End HDR TVs?

No, not all HDR TVs perform similarly. For a great HDR experience, a TV needs high brightness (1,000 nits or more), a wide colour gamut, support for the major HDR formats (HDR10, HDR10+, Dolby Vision, and HLG), and precise local dimming. Entry-level HDR TVs often lack most of these features.

For example, an HDR TV that costs ₹50,000 might use a display panel that peaks at only 400 nits. When an HDR scene asks the TV to display a frame at 800 nits, the TV can’t reach that level and maxes out at 400 nits, so it can’t reproduce the scene as faithfully as the director intended. Some TVs can reach up to 1,000 nits of peak brightness but can’t sustain it for long. Such a TV might faithfully reproduce an HDR scene for a few seconds or minutes, but it then gradually lowers its brightness and can no longer show HDR scenes as well as it did at first.

Most affordable HDR TVs also lack WCG and precise local dimming, which means they can’t reproduce HDR scenes as well as high-end HDR TVs can. Some TVs support only HDR10 or HDR10+, so if a movie is mastered in Dolby Vision, the TV can’t play the Dolby Vision version and has to fall back to another format, if the disc or streaming service provides one. Hence, when you are buying an HDR TV, check which HDR standards it supports, its peak and sustained brightness levels, whether it supports a wide colour gamut, and how many dimming zones it has.

HDR Standards: HDR10 vs HDR10+ vs Dolby Vision vs HLG

There are four primary HDR standards that TVs adopt: HDR10, HDR10+, Dolby Vision, and HLG. The most common of them is HDR10. Let us look at each of them and how it treats an HDR scene and controls an HDR TV.

1. HDR10

HDR10 is a royalty-free HDR standard, which means any TV (or other screen) manufacturer can adopt it without paying a licensing fee. However, it is less demanding than HDR10+ and Dolby Vision: it doesn’t prescribe how tone mapping should be done, so TV brands set tone mapping as per their own preference.

HDR10 uses static metadata, so the entire video uses the same tone mapping, which might not accurately represent every scene. Almost all HDR TVs are compatible with HDR10 because it is the cheapest and easiest standard to implement.

2. HDR10+

HDR10+ is a royalty-free HDR standard as well, similar to HDR10, but it features dynamic metadata. This means that it allows scene-by-scene and frame-by-frame tone mapping, rendering scenes more faithfully when compared to static metadata.

HDR10+ was developed by Samsung. Various TV brands, production houses, and video streaming apps currently support it. It is slightly harder to implement than HDR10 and requires better screen panels and processors to reproduce HDR10+ scenes.

3. Dolby Vision

Dolby Vision was developed by Dolby. Like HDR10+, it uses dynamic HDR metadata. However, it is a proprietary (not royalty-free) HDR standard, and it comes with a set of stricter rules and performance metrics. Hence, Dolby Vision is the most challenging (and costliest) HDR standard to implement, and fewer TVs are Dolby Vision-certified than support HDR10.

Although there are relatively few Dolby Vision videos out there, and fewer TVs and streaming apps support it than support HDR10, its support is slowly growing. TVs and video streaming devices from Amazon, Apple, Google, Hisense, LG, Sony, TCL, and Toshiba currently support Dolby Vision, as do video streaming and distribution services such as Apple, Disney+, and VUDU. A few smartphones from Apple, LG, and Sony also support Dolby Vision.

4. HLG (Hybrid Log Gamma)

HLG is currently supported by most HDR TV manufacturers, but it is primarily intended for broadcast, cable, satellite, and live TV channels. HLG simplifies things by combining SDR and HDR picture information into a single signal, without relying on metadata. Its transfer curve follows a conventional gamma-like curve for the darker and mid-tone parts of the picture and a logarithmic curve for the brighter highlights. This means that all TVs (SDR and HDR) can use the same HLG signal: an SDR TV displays it as a standard picture, while an HDR TV also renders the extended highlight range. However, due to its simplicity, its performance is not as good as that of HDR10+ and Dolby Vision.
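HLG’s backward compatibility comes from its transfer curve rather than from metadata. The sketch below implements the HLG OETF from ITU-R BT.2100, which converts normalised scene light E (0 to 1) into a signal value. The lower part is a square-root curve, similar in shape to a conventional SDR camera curve; the upper part is logarithmic, so bright highlights still fit into the same signal range.

```python
import math

# HLG OETF constants from ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalised linear scene light e in [0, 1] to an HLG signal in [0, 1]."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("scene light must be normalised to [0, 1]")
    if e <= 1 / 12:
        return math.sqrt(3 * e)  # square-root (gamma-like) segment
    return A * math.log(12 * e - B) + C  # logarithmic segment for highlights


if __name__ == "__main__":
    for e in (0.0, 0.01, 1 / 12, 0.25, 0.5, 1.0):
        print(f"E={e:.3f} -> signal {hlg_oetf(e):.3f}")
```

The two segments meet at a signal value of 0.5. Because the bottom half of the signal follows a conventional curve, an SDR TV shows an HLG broadcast as a watchable standard picture, while an HDR TV also expands the top half into brighter highlights.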

| | HDR10 | HDR10+ | Dolby Vision |
|---|---|---|---|
| Licensing | Royalty-Free | Royalty-Free | Proprietary, Licensed |
| Bit Depth | 10-Bit | 10-Bit | 12-Bit |
| Peak Brightness | 4,000 Nits | 4,000 Nits | 10,000 Nits |
| Metadata | Static | Dynamic | Dynamic |
| Tone Mapping | Varies By TV Brand | Better | Best |
| Content Availability | Best | Decent | Limited, But Growing |

Conclusion: HDR10 vs HDR10+ vs Dolby Vision vs HLG

Dolby Vision is currently the most advanced HDR format in terms of technical capability and performance metrics. There isn’t a lot of content mastered in Dolby Vision yet, but support for it is growing, with brands like Apple and LG throwing their weight behind it.

HDR10 is the most common HDR format, and it has the most content available right now. Almost all HDR-capable TVs, monitors, projectors, media streaming devices, set-top boxes, graphics cards, laptops, gaming consoles, and video streaming services support HDR10, but its performance isn’t as good as that of HDR10+ or Dolby Vision.

HDR10+ is technically almost as good as Dolby Vision, and it is royalty-free, too. However, very little content is mastered in HDR10+. Right now, it is primarily supported by Samsung and Amazon (Prime Video), and some Hisense and Vizio TVs support it, too. No gaming console supports HDR10+, and only Samsung smartphones are compatible with it.

Things To Look Out For While Buying An HDR TV

If you are in the market for an HDR TV, make sure that it supports as many HDR formats as possible. You should also prefer TVs that have more local dimming zones and support a wide colour gamut. That said, it is understandable if affordable TVs lack one or both of these features.

Here are the things you should look out for when buying an HDR TV:

- As many HDR standards as possible: HDR10, HDR10+, HLG, and Dolby Vision

- Lower-end HDR TVs should have at least 400 nits of peak brightness and 350 nits of sustained brightness. They should have support for HDR10 and HLG.

- Mid-range HDR TVs should have at least 600 nits of peak brightness and 450 nits of sustained brightness. They should also have support for local dimming zones and WCG (wide colour gamut). They should have support for HDR10, HDR10+ or Dolby Vision, and HLG.

- High-end HDR TVs should have 1,000 nits (or higher) peak brightness and 600 nits of sustained brightness. They should also have 32 or more local dimming zones and WCG (wide colour gamut). Such TVs should support HDR10, HDR10+ or Dolby Vision, and HLG.
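The tiers above can be written down as a small checklist. The sketch below encodes the brightness, dimming, colour gamut, and format thresholds from the list as data and reports the best tier a TV’s spec sheet meets. The `TvSpec` and `Tier` names and fields are hypothetical, chosen for illustration. The list gives no minimum zone count for mid-range TVs, so the sketch only requires that local dimming be present (at least one zone) there, and it reads “HDR10+ or Dolby Vision” as “at least one of the two”.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class TvSpec:
    """Hypothetical spec-sheet fields for an HDR TV."""
    peak_nits: float
    sustained_nits: float
    dimming_zones: int  # 0 means no local dimming
    wide_colour_gamut: bool
    formats: FrozenSet[str] = field(default_factory=frozenset)


@dataclass(frozen=True)
class Tier:
    name: str
    min_peak: float
    min_sustained: float
    min_zones: int
    needs_wcg: bool
    needs_dynamic_format: bool  # HDR10+ or Dolby Vision


# Thresholds taken from the buying guide above, best tier first.
TIERS = (
    Tier("High-end", 1000, 600, 32, True, True),
    Tier("Mid-range", 600, 450, 1, True, True),
    Tier("Lower-end", 400, 350, 0, False, False),
)


def meets(spec: TvSpec, tier: Tier) -> bool:
    """Check one TV against one tier's requirements."""
    if not {"HDR10", "HLG"} <= spec.formats:  # every tier needs both
        return False
    if tier.needs_dynamic_format and not ({"HDR10+", "Dolby Vision"} & spec.formats):
        return False
    return (spec.peak_nits >= tier.min_peak
            and spec.sustained_nits >= tier.min_sustained
            and spec.dimming_zones >= tier.min_zones
            and (spec.wide_colour_gamut or not tier.needs_wcg))


def best_tier(spec: TvSpec) -> Optional[str]:
    """Return the name of the best tier the TV meets, or None."""
    for tier in TIERS:
        if meets(spec, tier):
            return tier.name
    return None


if __name__ == "__main__":
    budget_tv = TvSpec(450, 360, 0, False, frozenset({"HDR10", "HLG"}))
    print(best_tier(budget_tv))  # Lower-end
```

Keeping the thresholds in a data table rather than in nested `if` statements makes it easy to adjust a tier without touching the checking logic.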
